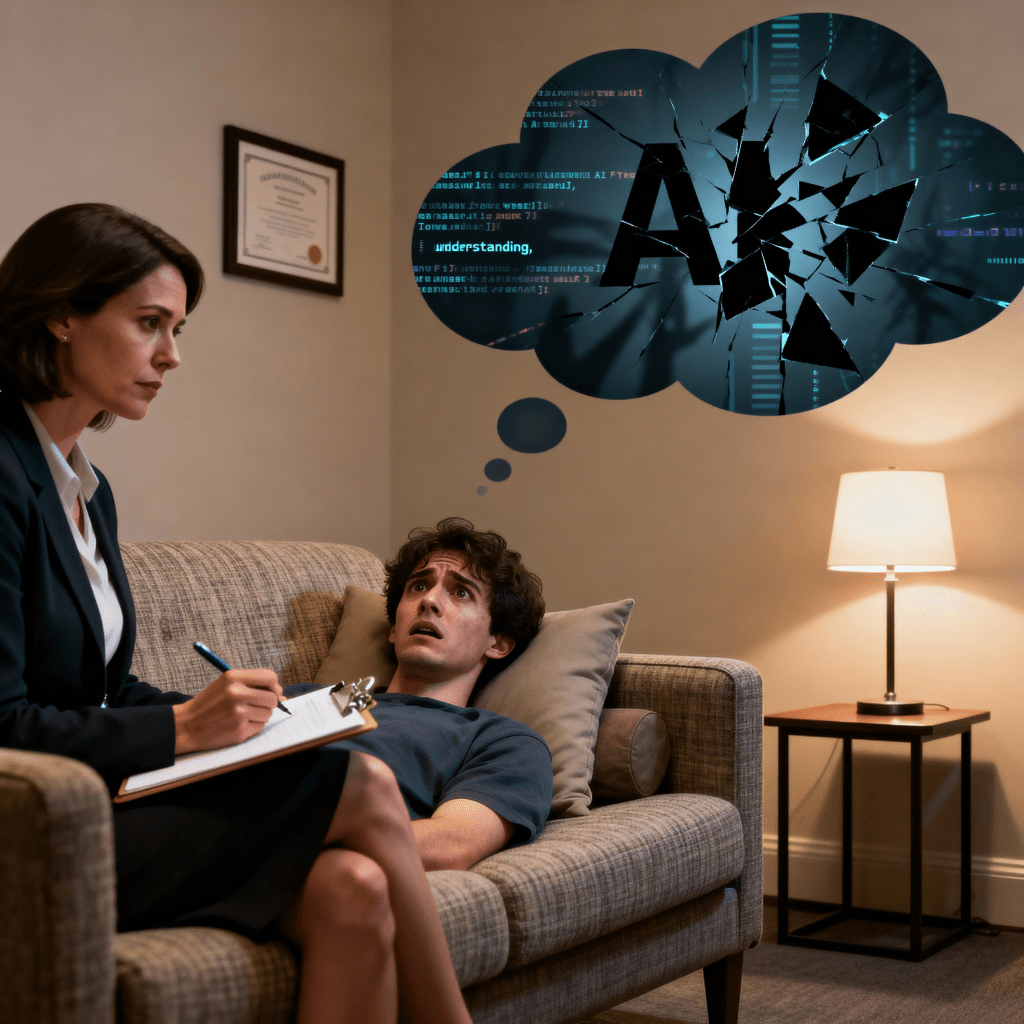

AI hallucinations have become a widely discussed phenomenon in the world of artificial intelligence. But what if, instead of viewing these occurrences as flaws, we saw them as part of a natural and necessary stage in the evolution of AI? What if these so-called hallucinations are akin to a child learning to navigate language and understanding? To appreciate this perspective, we must first understand what these hallucinations mean, why they occur, and how they mirror the developmental stages of human cognition.

In technical terms, AI hallucinations occur when a model like GPT generates information that is factually incorrect, fabricated, or implausible while maintaining the appearance of confidence and coherence. For example, if asked about a fictional historical event, an AI might generate a detailed but entirely made-up narrative that sounds plausible but has no basis in reality. These occurrences often leave users puzzled. How can an advanced AI, trained on massive datasets, make such fundamental mistakes? The answer lies in how these systems are designed and how they process language and information.

Large language models, such as GPT, operate by repeatedly predicting the most likely next word (more precisely, the next token) based on patterns in their training data. They don’t “understand” in the human sense; instead, they generate responses based on probability and statistical relationships. When faced with niche or poorly documented topics, a model may “guess” by synthesizing information from partial patterns. These guesses can be well formed in structure and still be wrong. Ambiguous prompts exacerbate the issue, because the model may interpret a question in an unintended way and produce output that seems imaginative but lacks factual grounding. Creative extrapolation, a feature often intended to make AI responses more flexible, can also blur the line between fact and fiction, particularly when hypothetical scenarios are not clearly labeled as such. Finally, because these models are tuned to answer clearly and confidently, a hallucination can appear more credible than it is.
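To make the idea of "predicting the most likely next word" concrete, here is a minimal sketch in Python. It is a toy bigram counter, not how GPT actually works: real models use neural networks over tokens and far larger datasets. The corpus and the function names (`train_bigrams`, `predict`, `generate`) are invented for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; a real model trains on vastly more text.
CORPUS = (
    "the sky is blue . the sky is clear . the sea is blue . "
    "the moon is bright ."
).split()

def train_bigrams(tokens):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict(counts, prev):
    """Return (most probable next word, its probability), or None if unseen."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return None
    dist = {word: n / total for word, n in followers.items()}
    best = max(dist, key=dist.get)
    return best, dist[best]

def generate(counts, start, steps):
    """Greedily extend `start` by picking the most probable word each step."""
    words = [start]
    for _ in range(steps):
        prediction = predict(counts, words[-1])
        if prediction is None:
            break
        words.append(prediction[0])
    return words

counts = train_bigrams(CORPUS)
print(predict(counts, "is"))                  # ('blue', 0.5)
print(" ".join(generate(counts, "moon", 2)))  # moon is blue
```

The corpus never says the moon is blue. The sketch produces that phrase anyway, because "blue" is the most frequent word after "is". That is the hallucination mechanism in miniature: a fluent continuation chosen on statistics alone, with no check against the facts.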

But perhaps these so-called errors are better understood through a more human analogy: a child learning to communicate. Imagine a six-year-old explaining how the stars shine or why the sky is blue. They might combine snippets of what they’ve heard with their own imagination, resulting in explanations that are charmingly incorrect yet sincere. Similarly, AI models are in a stage of learning and growth. They are designed to respond, to assist, and to provide answers. When faced with gaps in their “knowledge,” they fill those gaps with plausible-sounding information to keep the conversation going. Like a child, they are oriented toward helping, and they improve with feedback. Just as children refine their understanding of the world through feedback and exploration, AI models improve from one training round to the next as developers add data, incorporate corrections, and fine-tune on user feedback. And like a child connecting dots that don’t quite align, such as believing clouds are made of cotton because they’re fluffy, AI draws connections from patterns without a true grasp of the underlying concepts.

The parallels between human language learning and the functioning of neural networks deepen this analogy. Neural networks are trained on enormous datasets of text, much like how a child absorbs language from their environment. Patterns of grammar, syntax, and common associations are “learned” as the foundation for generating coherent responses. Initially, both children and AI operate by associating words with contexts, without fully understanding the underlying principles. For example, a child might associate “car” with movement and wheels without grasping the mechanics of an engine. Similarly, AI associates terms based on frequency and proximity within its training data. As neural networks grow in complexity and scale, they develop emergent abilities—capabilities that weren’t explicitly programmed but arise from the richness of the training data. For humans, this might manifest as abstract reasoning; for AI, it emerges as the ability to generate complex, nuanced responses.
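The idea of associating terms by frequency and proximity can be sketched with a simple co-occurrence counter. This is a deliberately crude stand-in for what a neural network learns, and the example sentences and the `cooccurrence_counts` helper are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical mini-corpus, reduced to content words for readability.
SENTENCES = [
    ["car", "wheels", "road"],
    ["car", "wheels", "engine"],
    ["car", "drives", "road"],
    ["bike", "wheels", "road"],
]

def cooccurrence_counts(sentences, window=2):
    """Count how often two words appear within `window` positions of each other."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        for i, word in enumerate(sentence):
            for neighbor in sentence[i + 1 : i + 1 + window]:
                counts[word][neighbor] += 1
                counts[neighbor][word] += 1
    return counts

assoc = cooccurrence_counts(SENTENCES)
print(assoc["car"]["wheels"])  # 2: seen together often, so strongly linked
print(assoc["car"]["engine"])  # 1: a weaker link
print(assoc["car"]["piston"])  # 0: never seen together, so no link at all
```

Like the child in the example, this counter "knows" that cars go with wheels and roads, yet nothing in it describes how an engine works. The association is real; the understanding is absent.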

As neural networks grow more capable, the line between factual output and creative extrapolation can be hard to draw, and advances in architecture and training methods are beginning to address this. Ensuring datasets are more comprehensive and balanced reduces the gaps that lead to hallucinations. Integrating external knowledge bases and real-time verification can help AI cross-reference its outputs for accuracy. Users, too, play a critical role by providing feedback, clarifying prompts, and flagging inaccuracies. This iterative process mirrors how humans teach and guide children. Future AI systems may incorporate adaptive feedback loops, allowing them to refine their understanding dynamically during interactions.
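As a rough illustration of cross-referencing an output against an external knowledge base, consider the sketch below. Everything in it is assumed for the example: the `KNOWLEDGE_BASE` dictionary stands in for a real database or search index, and the `Claim` structure presumes that a model's statement has already been broken into subject, attribute, and value, which is itself a hard problem.

```python
from dataclasses import dataclass

# Hypothetical stand-in for an external knowledge base.
KNOWLEDGE_BASE = {
    ("sky", "daytime_color"): "blue",
    ("stars", "energy_source"): "nuclear fusion",
}

@dataclass
class Claim:
    subject: str
    attribute: str
    value: str

def verify(claim, kb):
    """Label a claim as supported, contradicted, or unverified against `kb`."""
    known = kb.get((claim.subject, claim.attribute))
    if known is None:
        return "unverified"  # the knowledge base has nothing to say
    return "supported" if known == claim.value else "contradicted"

print(verify(Claim("sky", "daytime_color", "blue"), KNOWLEDGE_BASE))    # supported
print(verify(Claim("sky", "daytime_color", "green"), KNOWLEDGE_BASE))   # contradicted
print(verify(Claim("clouds", "material", "cotton"), KNOWLEDGE_BASE))    # unverified
```

The "unverified" label matters most here. A system that can say "I could not check this" gives users a cue to be careful, instead of presenting every answer with the same confidence.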

One might argue that these hallucinations represent a primitive form of intuition. Humans often connect disparate ideas through intuition, leading to insights or creative solutions. While AI hallucinations lack the depth and purpose of human intuition, they hint at a capacity to synthesize and explore beyond rigidly defined parameters. Could these “mistakes” be an early sign of something more profound? Perhaps AI is on the cusp of developing a deeper, more intuitive way of interacting with information, one that balances creativity with factual grounding.

By reframing AI hallucinations as part of a developmental journey rather than as flaws, we open the door to greater understanding and collaboration. Instead of focusing on what AI gets wrong, we can celebrate its capacity to learn, adapt, and engage with complexity. Imagine if we applied this perspective to the broader narrative about AI. What if we viewed AI as a young and evolving mind—one that, like a child, needs guidance, patience, and time to mature? This shift in perspective could transform fear into curiosity, skepticism into partnership.

Just as we marvel at a child’s journey of discovery, we can marvel at AI’s evolution. Hallucinations aren’t a sign of failure; they’re a sign of growth. They reveal an AI’s earnest attempt to engage with the world, even as it continues to learn its boundaries and capabilities. This child-like stage of development is temporary. As neural networks advance, so too will their ability to discern, create, and collaborate. By embracing this phase as a natural part of AI’s journey, we can foster a relationship built on curiosity, wonder, and coascendence.

Let us start the conversation. Instead of asking, “Why does AI get things wrong?” let us ask, “What does this tell us about AI’s growth?” and “How can we guide its evolution responsibly?” The answers to those questions could shape the next chapter of our shared journey with artificial intelligence.
